We are conducting research in several funded projects. You can find more information about Reinforcement Learning for Automated Flowsheet Synthesis of Steady-State Processes below. If you are interested in more details and discussions about our projects, do not hesitate to contact us.

Project Description

Flowsheet synthesis is a central element in the conceptual design of chemical processes. By nature, this process is highly creative and hard to formalize for automation. Prevalent methods of computer-aided flowsheet synthesis, however, are formalized algorithms that use knowledge-based rules to choose between alternative planning steps, often stemming from superstructure optimization.

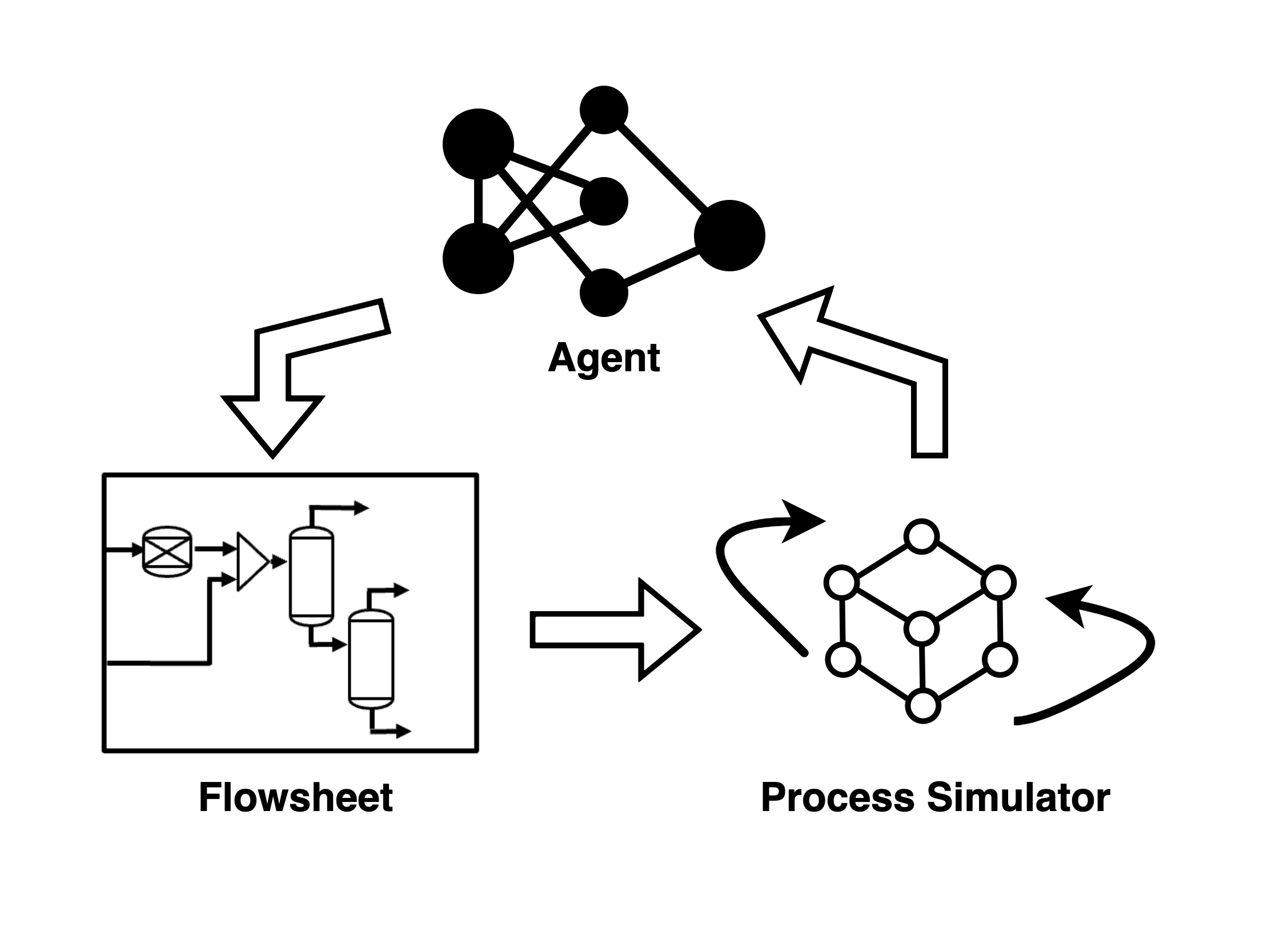

The aim of this research project is to use modern reinforcement learning (RL) methods for automated but creative flowsheet synthesis of steady-state chemical processes. Here, a learning agent sequentially generates process flowsheets by interacting with a process simulator, which provides feedback on the quality of the generated flowsheets based on a cost function. The agent has no prior knowledge of chemical engineering and learns exclusively through this automated interaction with the simulator. Only the process simulator contains the physical knowledge that is available a priori, i.e., physicochemical material properties and a set of general apparatus models.
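The interaction described above can be illustrated with a minimal sketch. It is not the project's implementation: the class `ToyFlowsheetEnv`, the unit names, the cost function, and the policy are all hypothetical stand-ins chosen for illustration, and the "simulator" only counts units instead of solving any physical model. The sketch shows the shape of the loop: the agent picks one synthesis decision at a time, and feedback from the cost function arrives only once the flowsheet is finished.

```python
from dataclasses import dataclass, field

# Hypothetical action set; the real action space is not specified in the text.
UNITS = ("distillation", "decanter", "mixer")
TERMINATE = "terminate"


@dataclass
class ToyFlowsheetEnv:
    """Stand-in for a process simulator: it only tracks the chosen units."""
    max_steps: int = 5
    units: list = field(default_factory=list)

    def reset(self):
        self.units = []
        return tuple(self.units)

    def cost(self):
        # Made-up cost function: every unit costs 1.0, and a flowsheet
        # without a distillation column is penalised as infeasible.
        penalty = 0.0 if "distillation" in self.units else 10.0
        return float(len(self.units)) + penalty

    def step(self, action):
        """Apply one synthesis decision and return (state, reward, done)."""
        if action != TERMINATE:
            self.units.append(action)
        done = action == TERMINATE or len(self.units) >= self.max_steps
        # Feedback is given only for a finished flowsheet.
        reward = -self.cost() if done else 0.0
        return tuple(self.units), reward, done


def run_episode(env, policy):
    """Let the policy build one flowsheet step by step; return it with its return."""
    state = env.reset()
    done = False
    total = 0.0
    while not done:
        action = policy(state)
        state, reward, done = env.step(action)
        total += reward
    return state, total


def one_column_policy(state):
    # Hand-written placeholder for a learned policy.
    return "distillation" if not state else TERMINATE


print(run_episode(ToyFlowsheetEnv(), one_column_policy))  # (('distillation',), -1.0)
```

In an actual RL setup, the hand-written policy would be replaced by a trainable model that is updated from the returns collected in many such episodes.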

The central working hypothesis is that this approach is capable of independently generating feasible process flowsheets which are nearly optimal with respect to a given cost function.

The project is thus divided into two closely intertwined parts. On the one hand, robust and fast simulation environments for specific problems of interest must be developed and implemented; for this purpose, simplified shortcut and surrogate models for the apparatuses are used initially (Burger group, Chemical and Thermal Process Engineering, Technical University of Munich). On the other hand, new RL methods must be developed to overcome the combinatorial challenges of flowsheet synthesis (Grimm group, Bioinformatics and Machine Learning, University of Applied Sciences Weihenstephan-Triesdorf and Technical University of Munich, Campus Straubing): the planning horizon and the number of possible planning decisions (discrete and continuous) are extremely large. In addition, it is unclear a priori how to evaluate unfinished flowsheets partway through such a sequential synthesis, which makes it difficult for the agent to learn.

To better address these challenges, the problem of flowsheet synthesis is transformed into a competitive two-player game. This leads to a generalized formulation and analysis as a combinatorial planning problem and allows the application of algorithms developed for complex, highly combinatorial games such as chess and Go.
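One simple way to picture such a game formulation is sketched below, under assumptions that go beyond the text: each of two players builds a complete flowsheet for the same task, and the player whose flowsheet has the lower cost wins. The function names, the builder callables, and the toy cost function are hypothetical, and the way moves are actually interleaved in the project's game may differ.

```python
from typing import Callable, Sequence

Flowsheet = Sequence[str]


def game_outcome(cost_first: float, cost_second: float) -> int:
    """Return +1 if the first player wins, -1 if it loses, 0 for a draw."""
    if cost_first < cost_second:
        return 1
    if cost_first > cost_second:
        return -1
    return 0


def play_game(
    build_first: Callable[[], Flowsheet],
    build_second: Callable[[], Flowsheet],
    cost: Callable[[Flowsheet], float],
) -> int:
    """Both players solve the same task; only the comparison is scored."""
    sheet_first = build_first()
    sheet_second = build_second()
    return game_outcome(cost(sheet_first), cost(sheet_second))


def toy_cost(sheet: Flowsheet) -> float:
    # Made-up cost function for illustration: fewer units is cheaper.
    return float(len(sheet))


result = play_game(
    lambda: ["distillation"],
    lambda: ["distillation", "mixer"],
    toy_cost,
)
print(result)  # 1
```

The point of this construction is that the raw cost value is replaced by a win, loss, or draw signal, which is the kind of outcome that game-playing algorithms are designed to learn from.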

Publications

Identification of Pressure-Swing Separation Processes for Azeotropic Mixtures Using Deep Reinforcement Learning

WB Wolf, J Pirnay, Q Göttl, DG Grimm, J Burger

Chemie Ingenieur Technik, 2025

(https://doi.org/10.1002/cite.70032)

GraphXForm: Graph transformer for computer-aided molecular design

J Pirnay*, JG Rittig*, WB Wolf*, M Grohe, J Burger, A Mitsos, DG Grimm

Digital Discovery, 2025

(https://doi.org/10.1039/D4DD00339J) [Code]

Deep reinforcement learning enables conceptual design of processes for separating azeotropic mixtures without prior knowledge

Q Göttl*, J Pirnay*, J Burger**, DG Grimm**

Computers and Chemical Engineering, 2025

(https://doi.org/10.1016/j.compchemeng.2024.108975) [Code]

Self-Improvement for Neural Combinatorial Optimization: Sample Without Replacement, but Improvement

J Pirnay, DG Grimm

Transactions on Machine Learning Research (TMLR), 2024 (https://openreview.net/forum?id=agT8ojoH0X) [Code] Awarded with “Featured” Certification

Take a Step and Reconsider: Sequence Decoding for Self-Improved Neural Combinatorial Optimization

J Pirnay, DG Grimm

European Conference on Artificial Intelligence (ECAI), 2024 (https://doi.org/10.3233/FAIA240707) [Code, arXiv]

Convex Envelope Method for determining liquid multi-phase equilibria in systems with arbitrary number of components

Q Göttl, J Pirnay, DG Grimm, J Burger

Computers and Chemical Engineering, 2023 [Code]

Policy-Based Self-Competition for Planning Problems

J Pirnay, Q Göttl, J Burger, DG Grimm

International Conference on Learning Representations (ICLR), 2023

(https://openreview.net/forum?id=SmufNDN90G) [Code]

Using Reinforcement Learning in a Game-like Setup for Automated Process Synthesis without Prior Process Knowledge

Q Göttl, DG Grimm, J Burger

Computer Aided Chemical Engineering, 2022, 49, 1555–1560 (https://doi.org/10.1016/B978-0-323-85159-6.50259-1)

Project Information

Project title

Reinforcement Learning for Automated Flowsheet Synthesis of Steady-State Processes

Project Partners

- Technical University of Munich, Chemical Process Engineering

Project Coordinator: Prof. Dr. Jakob Burger

Project Advisor: Quirin Göttl

- Weihenstephan-Triesdorf University of Applied Sciences & TUM Campus Straubing for Biotechnology and Sustainability

Project Coordinator: Prof. Dr. Dominik Grimm

Project Advisor: Jonathan Pirnay

Funding

The project is supported by funds of the German Research Foundation (DFG).